Our bodies contain trillions of cells. But what role does each individual cell play, and how do the cells of a healthy person differ from those of a sick person? Answering such questions requires analyzing and interpreting vast amounts of data, and machine learning techniques are used for this. Researchers at Helmholtz Munich and the Technical University of Munich (TUM) have now tested self-supervised learning as a promising approach for analyzing data from 20 million cells or more.

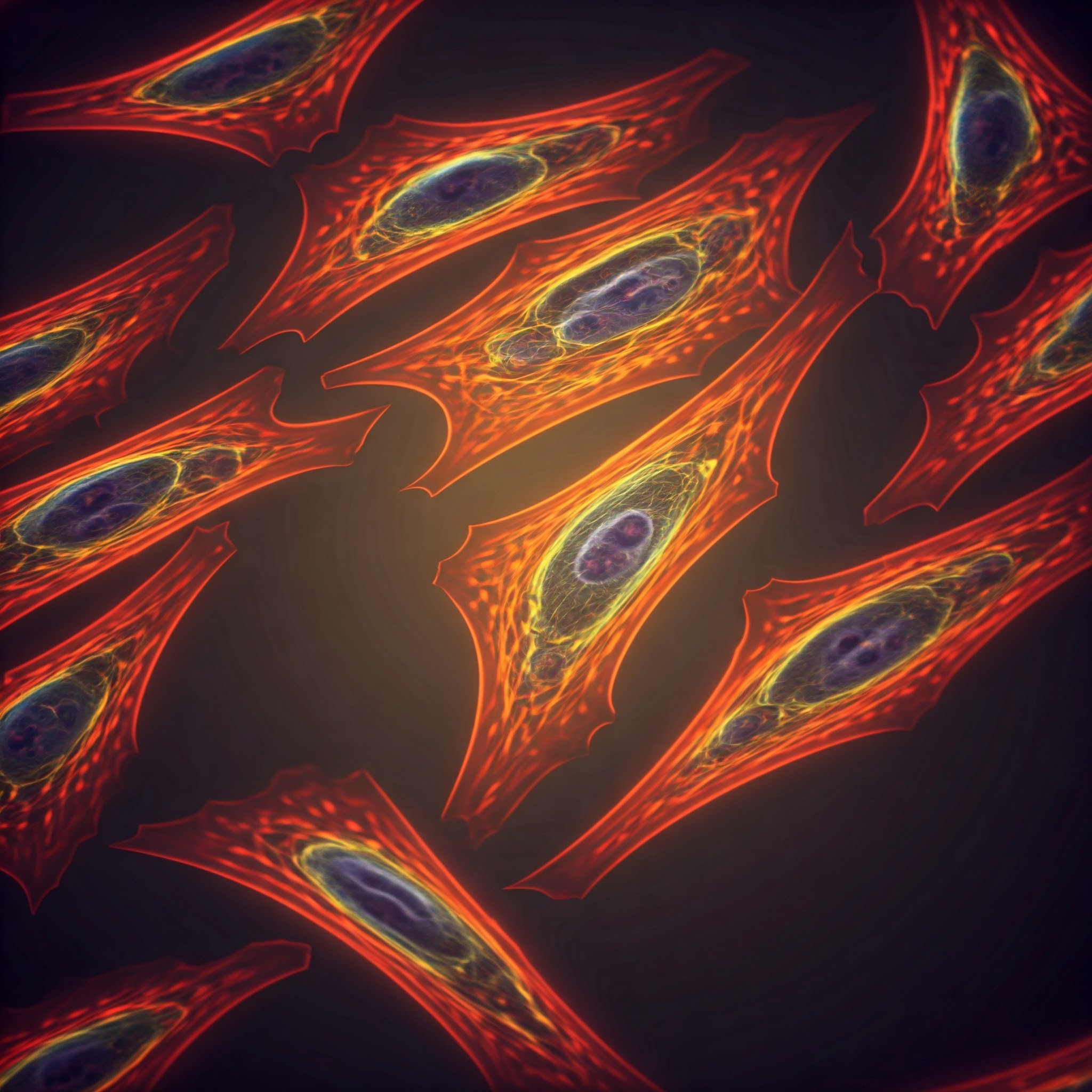

Researchers have advanced single-cell technology significantly in recent years. It allows tissue to be examined cell by cell, making it easier to identify the distinct roles of different cell types. For example, comparing diseased lung tissue with healthy tissue can reveal how individual lung cells are altered by smoking, lung cancer, or a COVID-19 infection.

At the same time, these analyses produce ever-increasing amounts of data. To reinterpret existing data, draw conclusions from recurring patterns, and transfer the findings to new fields, the researchers want to use machine learning techniques. The Munich team published its findings on one such approach, self-supervised learning, in the journal Nature Machine Intelligence.

In self-supervised learning, a model learns from unlabeled data by deriving its training signal from the data itself. The study focused on two such techniques. As the name implies, masked learning hides part of the input data and trains the model to reconstruct the missing pieces. The researchers also used contrastive learning, which teaches the model to place similar data points close together and push dissimilar ones apart.
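To make the two training objectives more concrete, the following Python sketch implements each one in a few lines of plain code. It is not the study's implementation. It assumes that a cell is represented as a list of gene-expression values, that masked genes are simply set to zero, that reconstruction quality is measured by mean squared error on the hidden genes only, and that the contrastive objective takes the common InfoNCE form with cosine similarity and a temperature. All function names are hypothetical, and no neural network is trained: the "model outputs" are supplied by hand.

```python
import math
import random


def mask_profile(profile, mask_fraction, rng):
    """Hypothetical helper: hide a random subset of genes.

    Assumption: hidden genes are set to 0.0. Returns the masked copy
    and the sorted indices of the hidden genes."""
    n_masked = max(1, int(len(profile) * mask_fraction))
    hidden = sorted(rng.sample(range(len(profile)), n_masked))
    masked = list(profile)
    for i in hidden:
        masked[i] = 0.0
    return masked, hidden


def masked_reconstruction_loss(profile, reconstruction, hidden):
    """Mean squared error computed only on the hidden genes."""
    errors = [(profile[i] - reconstruction[i]) ** 2 for i in hidden]
    return sum(errors) / len(errors)


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce_loss(anchors, positives, temperature=0.1):
    """InfoNCE-style contrastive loss (an assumed, common formulation).

    anchors[k] and positives[k] embed two views of the same cell (a positive
    pair); every positives[j] with j != k serves as a negative for anchors[k]."""
    total = 0.0
    for k, anchor in enumerate(anchors):
        logits = [cosine(anchor, p) / temperature for p in positives]
        # Log-sum-exp with the maximum subtracted for numerical stability.
        m = max(logits)
        log_denominator = m + math.log(sum(math.exp(x - m) for x in logits))
        # Cross-entropy with the matching view as the correct "class".
        total += log_denominator - logits[k]
    return total / len(anchors)


if __name__ == "__main__":
    rng = random.Random(0)
    cell = [3.0, 0.0, 1.5, 2.0, 0.5, 4.0]
    masked, hidden = mask_profile(cell, mask_fraction=0.5, rng=rng)
    # Stand-in for a model output: a perfect reconstruction gives zero loss.
    print(masked_reconstruction_loss(cell, cell, hidden))  # 0.0
    # Two cells; each augmented view stays close to its own original.
    anchors = [[1.0, 0.0], [0.0, 1.0]]
    aligned = [[0.9, 0.1], [0.1, 0.9]]
    swapped = [[0.1, 0.9], [0.9, 0.1]]
    print(info_nce_loss(anchors, aligned) < info_nce_loss(anchors, swapped))  # True
```

Computing the reconstruction loss only on the hidden genes is what forces a model to infer them from the genes it can still see. In the contrastive example, the loss is small when each cell's two views are paired correctly and large when the pairs are swapped.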

The team applied both self-supervised techniques to data from more than 20 million individual cells and compared the results with those of conventional supervised learning. To evaluate the approaches, the researchers focused on tasks such as cell-type prediction and gene-expression reconstruction.
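The article does not say how the predictions were scored. As an illustrative assumption, cell-type prediction is often evaluated with a macro-averaged F1 score, which weights rare and common cell types equally. The hypothetical helper below computes it in plain Python and shows why it can be more informative than plain accuracy when one cell type dominates a dataset.

```python
def macro_f1(true_labels, predicted_labels):
    """Unweighted mean of per-class F1 scores (hypothetical helper).

    Classes are taken from both label lists; a class that is never
    predicted correctly contributes an F1 of 0."""
    classes = sorted(set(true_labels) | set(predicted_labels))
    pairs = list(zip(true_labels, predicted_labels))
    scores = []
    for c in classes:
        tp = sum(1 for t, p in pairs if t == c and p == c)
        fp = sum(1 for t, p in pairs if t != c and p == c)
        fn = sum(1 for t, p in pairs if t == c and p != c)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        scores.append(f1)
    return sum(scores) / len(scores)


if __name__ == "__main__":
    # Hypothetical data: 8 T cells and 2 B cells; a naive classifier
    # labels every cell as "T cell".
    true = ["T cell"] * 8 + ["B cell"] * 2
    predicted = ["T cell"] * 10
    accuracy = sum(t == p for t, p in zip(true, predicted)) / len(true)
    print(accuracy)                             # 0.8
    print(round(macro_f1(true, predicted), 3))  # 0.444
```

The naive classifier looks good by accuracy but scores poorly on macro F1 because it never identifies the rare B cells, which is the kind of failure a fair comparison between learning approaches needs to expose.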

The study's findings show that self-supervised learning improves performance, particularly in transfer tasks, in which knowledge gained from a larger auxiliary dataset is used to analyze smaller datasets. Results for zero-shot cell predictions, which are made without any training on the target task, are similarly encouraging. Comparing the two techniques, the researchers found masked learning better suited than contrastive learning for applications involving large single-cell datasets.
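One common way to use a pretrained model without any task-specific training is to embed cells with the frozen model and label each new cell by a k-nearest-neighbor vote among already annotated reference cells. The sketch below assumes the embeddings have already been computed (here they are hard-coded two-dimensional vectors) and uses hypothetical names. It illustrates the general idea and is not necessarily the evaluation protocol used in the study.

```python
import math
from collections import Counter


def knn_predict(reference_embeddings, reference_labels, query_embedding, k=3):
    """Label a query cell by majority vote among its k nearest reference cells.

    Assumption: all embeddings come from a pretrained encoder whose weights
    are not updated, so no task-specific training takes place here."""
    ranked = sorted(
        zip(reference_embeddings, reference_labels),
        key=lambda pair: math.dist(pair[0], query_embedding),  # Euclidean distance
    )
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    # Hypothetical annotated reference cells in a 2-D embedding space.
    reference = [[0.0, 0.1], [0.2, 0.0], [0.1, 0.2],
                 [5.0, 5.1], [5.2, 4.9], [4.8, 5.0]]
    labels = ["T cell"] * 3 + ["B cell"] * 3
    queries = [[0.3, 0.1], [5.1, 5.0]]
    print([knn_predict(reference, labels, q) for q in queries])  # ['T cell', 'B cell']
```

Because the encoder stays fixed, the quality of these predictions depends entirely on how well pretraining has organized the embedding space, which is why such probes are a useful test of what a self-supervised model has learned.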

The researchers are using the data to build so-called virtual cells: comprehensive computer models that capture the diversity of cells found across different datasets. Such models are promising, for example, for studying the cellular changes associated with disease. The study's findings offer valuable guidance on how to refine such models and train them more efficiently.

Source: Technical University of Munich – News

Journal Reference: Richter, Till, et al. "Delineating the Effective Use of Self-supervised Learning in Single-cell Genomics." Nature Machine Intelligence, vol. 7, no. 1, 2025, pp. 68-78, DOI: https://doi.org/10.1038/s42256-024-00934-3.